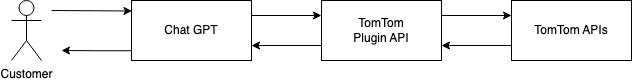

Integrating TomTom APIs into the GPT Plugin Ecosystem

TomTom provides a collection of APIs for many location-based services, including real-time traffic updates, efficient routing and detailed information about points of interest (POIs). These services become even more useful when they are tightly integrated into a conversational AI environment.

In an industry first, we’ve integrated these APIs into the GPT plugin ecosystem, opening up a new way for users to interact with location information in a conversational context. This integration is not merely about accessing data; it enables AI systems to process and respond to user queries with accurate, real-time location-based information.

Understanding the ChatGPT Plugin Ecosystem

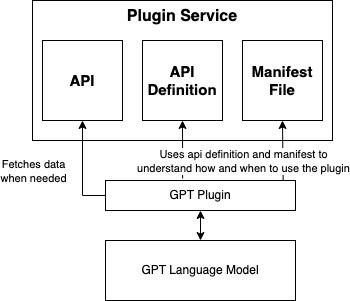

ChatGPT plugins allow users to retrieve information from services defined by plugin developers, augmenting ChatGPT’s conversational capabilities with that data. When processing user input, ChatGPT determines whether it needs to request specific information through one of the user’s installed plugins. For example, if a user asks for a route between two destinations, the TomTom plugin is invoked to retrieve up-to-date information about the route.

Each plugin has a manifest file that serves as a blueprint of its capabilities, invocation methods and user-facing documentation. Once developed, the plugin is published to the “Plugin Store”, where users can choose to install it. Plugins are not activated automatically upon installation, so users must enable them manually. With active plugins in place, ChatGPT evaluates each user query and decides how best to respond. If ChatGPT determines that a plugin could improve the response, it uses the relevant plugin to fulfil the user’s request.

TomTom APIs

TomTom offers the following APIs, among many others listed at https://developer.tomtom.com/. As a first step, we mapped our data to how customers could use it in a conversational environment.

- Routing API: This API provides highly accurate route calculations between different locations. It offers various options, including the fastest, shortest and most eco-friendly routes. It can also consider current traffic conditions and other restrictions or preferences, such as avoiding toll roads or including waypoints.

- Search API: This geocoding service allows developers to convert addresses into geographic coordinates and vice versa. It also enables searching for places or points of interest (POIs) based on various parameters, such as location or type of place.

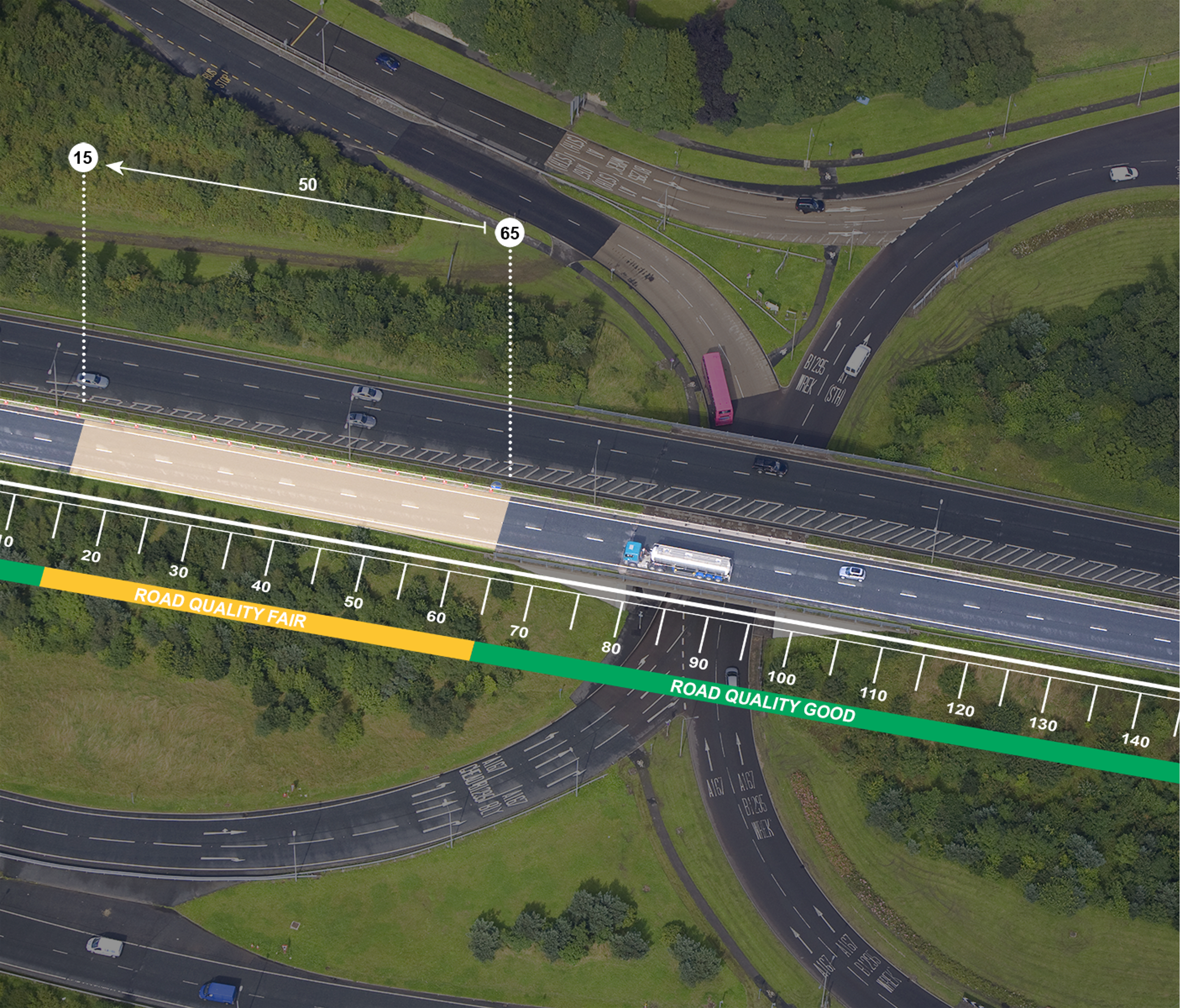

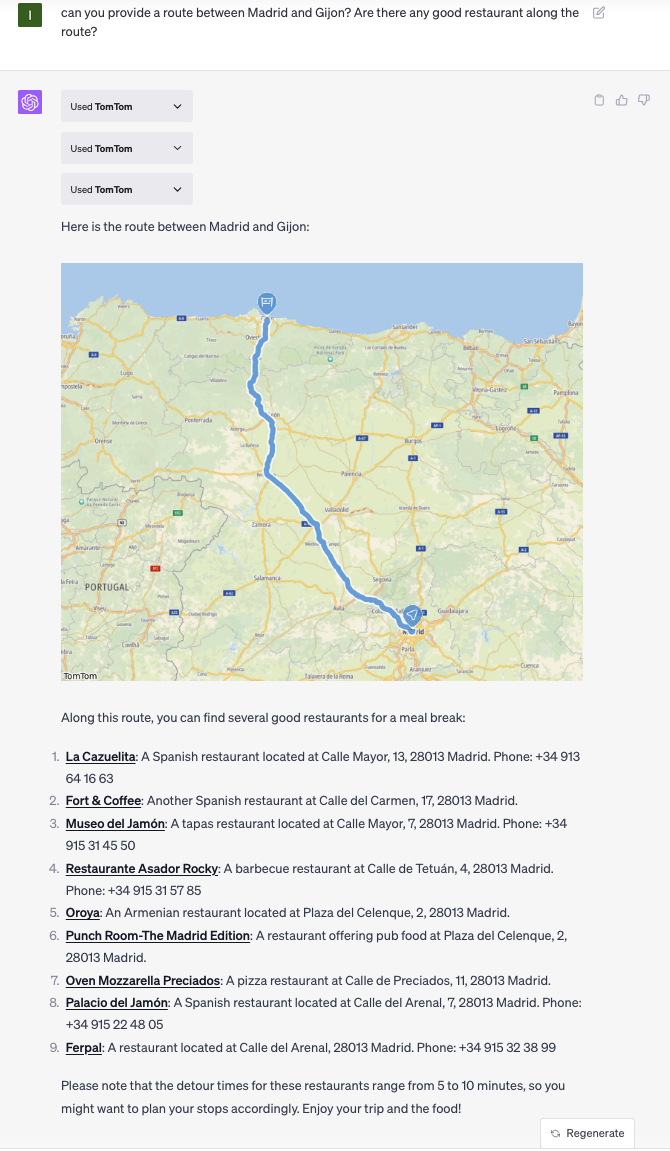

- Traffic API: The Traffic API provides real-time and predictive traffic information. It can give details on traffic flow, incidents and congestion, which can help calculate ETAs or determine the best time to travel.

With these three primary integrations, GPT can retrieve routing information between two points, find POIs or the location of an address, and check the traffic in a specific area. GPT combines them when needed and can even combine results from different plugins; for example, it can show a map image that combines results from another plugin and TomTom.

Now let’s look at a specific example. When asked “Can you provide a route between Madrid and Gijon? Are there any good restaurants along the way?”, the model understands that it needs to make multiple requests to the plugin: 1) fetch a route between Madrid and Gijon, 2) search for restaurants along that route and 3) request a map that displays the route. LLMs are trained to detect when a plugin needs to be called based on the user input, so ChatGPT determines when and how external plugins are invoked. This is especially important when chaining plugin calls, for example using another plugin to check restaurant bookings and then displaying them on a map or creating a route to them.
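To make step 1 of that example more concrete, here is a minimal sketch of how a plugin backend might turn a route request into a TomTom Routing API call, plus a Traffic API flow lookup. It assumes TomTom's public `calculateRoute` endpoint with its `routeType`, `traffic` and `avoid` query parameters and the `flowSegmentData` endpoint; the helper names, the placeholder API key and the approximate city coordinates are illustrative assumptions, not the plugin's actual code.

```python
from urllib.parse import urlencode

# Base URL of TomTom's public APIs; "YOUR_API_KEY" below is a placeholder.
TOMTOM_BASE = "https://api.tomtom.com"


def build_route_url(points, api_key, route_type="fastest", traffic=True, avoid=()):
    """Hypothetical helper: build a Routing API calculateRoute URL.

    points is a list of (lat, lon) tuples: the first is the origin, the last is
    the destination and anything in between is used as a waypoint.
    """
    if len(points) < 2:
        raise ValueError("a route needs at least an origin and a destination")
    # The Routing API expects colon-separated "lat,lon" pairs in the path.
    locations = ":".join(f"{lat},{lon}" for lat, lon in points)
    params = [
        ("key", api_key),
        ("routeType", route_type),  # e.g. fastest, shortest, eco
        ("traffic", "true" if traffic else "false"),  # take live traffic into account
    ]
    # avoid may be repeated, e.g. avoid=tollRoads&avoid=ferries
    params += [("avoid", item) for item in avoid]
    return f"{TOMTOM_BASE}/routing/1/calculateRoute/{locations}/json?{urlencode(params)}"


def build_traffic_flow_url(lat, lon, api_key, zoom=10):
    """Hypothetical helper: Traffic API flow data for the road segment nearest a point."""
    query = urlencode({"point": f"{lat},{lon}", "key": api_key})
    return f"{TOMTOM_BASE}/traffic/services/4/flowSegmentData/absolute/{zoom}/json?{query}"


# Approximate city-centre coordinates, for illustration only.
MADRID = (40.4168, -3.7038)
GIJON = (43.5322, -5.6611)

route_url = build_route_url([MADRID, GIJON], "YOUR_API_KEY", avoid=["tollRoads"])
traffic_url = build_traffic_flow_url(*MADRID, "YOUR_API_KEY")
print(route_url)
print(traffic_url)
```

In a real handler, these URLs would be fetched with an HTTP client and the JSON response passed back to the model; steps 2 and 3 of the example could then reuse the route returned by step 1.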

The Development Process

OpenAI offers great documentation for getting started: https://platform.openai.com/docs/plugins/introduction. We chose an Azure sample template, https://github.com/Azure-Samples/openai-plugin-fastapi/, which offers a straightforward setup process and infrastructure creation. Development works like it does for any other API: you write its definition and contract. The key difference is that the plugin manifest and the API definition must clearly describe how and when the model should use the plugin, and what the contract for each API is. Our current manifest describes what the plugin is capable of, including using the /route-map-preview-url/ path to display images to the user.

```json
{
  "schema_version": "v1",
  "name_for_human": "TomTom",
  "name_for_model": "TomTom",
  "description_for_human": "Explore maps, get traffic updates, find destinations, plan routes and access local insights.",
  "description_for_model": "Explore maps, get traffic updates, find destinations, plan routes and access local insights, all in real-time. Create preview with each completed task using openapi specs from plugin of /route-map-preview-url/ path.",
  "auth": {
    "type": "none"
  },
  "api": {
    "type": "openapi",
    "url": "$host/openapi.yaml",
    "is_user_authenticated": false
  },
  "logo_url": "$host/logo.png",
  "contact_email": "plugin-support@tomtom.com",
  "legal_info_url": "https://developer.tomtom.com/legal#general"
}
```

Note the use of template variables such as $host. These are necessary when working with multiple environments, especially when development/staging and production deployments rely on different hosts and configuration values. The API implementation focuses on converting the user’s request, as described by the API definition, into requests to TomTom APIs. The integration is built on top of TomTom’s public APIs, as an example of the kind of innovation any developer can build with this data.
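The article does not say how `$host` gets resolved. One simple approach, sketched here under that assumption, is to render the manifest for each environment before serving it. Python's `string.Template` happens to use the same `$name` placeholder syntax, and its `substitute()` method raises `KeyError` for any placeholder without a value, so a typo fails loudly instead of shipping a broken URL. The host names and the `render_manifest` helper are hypothetical.

```python
import json
from string import Template

# A trimmed copy of the manifest above, keeping only the fields that use $host.
MANIFEST_TEMPLATE = """{
  "schema_version": "v1",
  "name_for_model": "TomTom",
  "api": {
    "type": "openapi",
    "url": "$host/openapi.yaml",
    "is_user_authenticated": false
  },
  "logo_url": "$host/logo.png"
}"""

# Hypothetical per-environment hosts; the real deployment hosts are not published.
HOSTS = {
    "dev": "http://localhost:8000",
    "staging": "https://staging.plugin.example.com",
    "prod": "https://plugin.example.com",
}


def render_manifest(environment: str) -> dict:
    """Fill in $host for one environment and parse the result as JSON."""
    host = HOSTS[environment].rstrip("/")  # avoid producing "//openapi.yaml"
    # substitute() raises KeyError for unknown placeholders such as a mistyped $hots.
    rendered = Template(MANIFEST_TEMPLATE).substitute(host=host)
    return json.loads(rendered)


if __name__ == "__main__":
    for env in HOSTS:
        print(env, render_manifest(env)["api"]["url"])
```

Parsing the rendered text with `json.loads` also catches a malformed manifest before it is served to ChatGPT.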

As an example, below is the definition of the search endpoint. The model sends the query the user is interested in along with the user’s intent, which helps the service choose the best response for the use case.

```yaml
/search/2/search/{query}.json:
  get:
    summary: "Search endpoint to be used for fuzzy location search of POIs and addresses, this service is not providing ranks of places or top categories etc."
    operationId: "searchOperation"
    parameters:
      - name: "query"
        in: "path"
        required: true
        description: "Search query like name and location of POI"
        schema:
          type: "string"
      - in: "query"
        name: "limit"
        required: true
        description: "Maximum number of search results that will be returned."
        schema:
          maximum: 1
          type: integer
      - in: "query"
        name: "userintent"
        required: true
        description: "Please provide the end-goal of the conversation with user as well as intermediate task you are trying to achieve. This helps the service to respond in optimal way"
        schema:
          type: string
    responses:
      "200":
        description: "Successful operation"
        content:
          application/json:
            schema:
              type: "object"
      "400":
        description: "Invalid parameter provided"
      "404":
        description: "Not found"
      "500":
        description: "Internal server error"
```

The main challenges encountered during this implementation were:

- Writing a plugin definition that matched the use cases we wanted to cover.

- Working around incompatibilities between ChatGPT plugin registration and parts of the OpenAPI specification.

- Tuning parameters and responses to strike the right balance between LLM response quality and performance.

- Testing, testing and more testing.
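The third challenge, fitting parameters and responses to the LLM, can be illustrated with a minimal sketch of a search handler. It assumes the handler forwards to TomTom's public Search API (`/search/2/search/{query}.json`, which the plugin path above mirrors), caps `limit` at the `maximum: 1` from the definition above, and trims the response to the few fields a model is likely to need (POI name, `address.freeformAddress` and `position`). The function names and the sample payload are hypothetical, and because the article does not describe how the service uses `userintent`, it is left out here.

```python
from urllib.parse import quote, urlencode

SEARCH_BASE = "https://api.tomtom.com/search/2/search"
MAX_RESULTS = 1  # matches "maximum: 1" on the limit parameter in the plugin definition


def build_search_url(query: str, api_key: str, limit: int = 1) -> str:
    """Hypothetical helper: map the plugin's search request onto TomTom's Search API."""
    limit = min(max(limit, 1), MAX_RESULTS)  # clamp into [1, MAX_RESULTS]
    # The query is a path segment, so encode everything, including "/".
    path = quote(query, safe="")
    params = urlencode({"key": api_key, "limit": limit})
    return f"{SEARCH_BASE}/{path}.json?{params}"


def trim_search_response(payload: dict) -> list:
    """Keep only the fields the model is likely to need from a Search API response."""
    trimmed = []
    for result in payload.get("results", []):
        position = result.get("position", {})
        trimmed.append({
            "name": result.get("poi", {}).get("name"),  # None for plain address matches
            "address": result.get("address", {}).get("freeformAddress"),
            "lat": position.get("lat"),
            "lon": position.get("lon"),
        })
    return trimmed


# Made-up payload shaped like a Search API response, for illustration only.
sample = {
    "summary": {"query": "restaurant", "numResults": 1},
    "results": [{
        "type": "POI",
        "score": 2.5,
        "poi": {"name": "Example Restaurant", "categories": ["restaurant"]},
        "address": {"freeformAddress": "Calle Ejemplo 1, Gijon"},
        "position": {"lat": 43.54, "lon": -5.66},
    }],
}

print(build_search_url("restaurants in Gijon", "YOUR_API_KEY", limit=5))
print(trim_search_response(sample))
```

Whether one result and four fields are the right cut depends on the use case; the design point is that every extra field or result sent back is likely to cost tokens and latency on the model side.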

We tested the API with integration tests covering all the different use cases and automated the development process as much as possible. Still, because the model decides when and how to call the plugin, a mature manual validation process is needed to check that all covered scenarios work as expected. We plan to add more support and capabilities soon. In the meantime, if you have suggestions or feedback, or encounter a problem, please let us know at plugin-support@tomtom.com.

Conclusion

This landmark integration enhances the conversational AI experience with real-time location data and showcases the power and potential of the ChatGPT ecosystem when extended with context-specific plugins. The seamless fusion of TomTom’s sophisticated mapping services with ChatGPT’s conversational capabilities paves the way for an enriched user experience.
